Intro to Load Balancers

An introduction to what load balancers are and how they work

This is the first of a two-part series on load balancing basics; next, I’ll cover the basics of building a load balancer in Go.

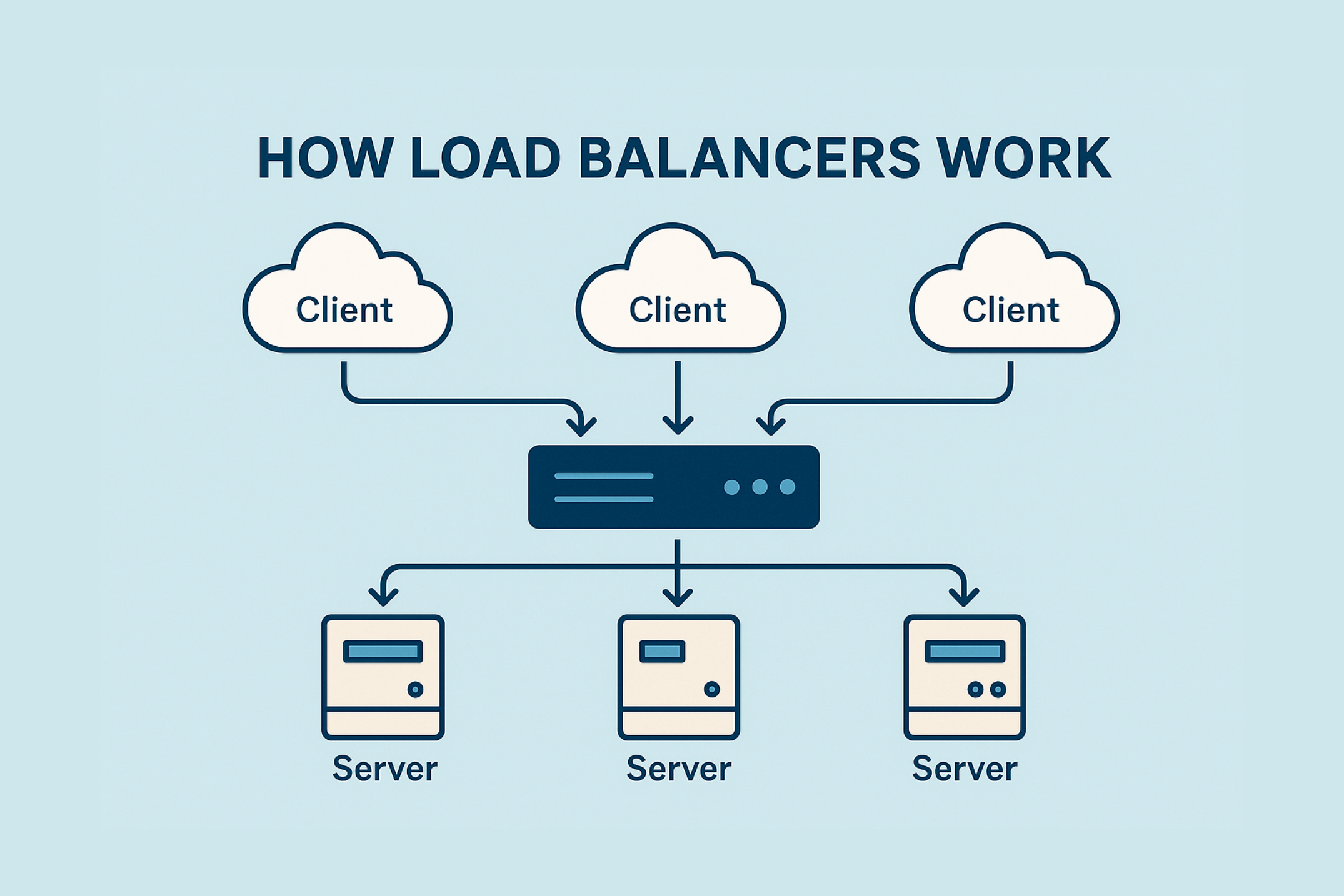

Load balancing is a foundational pillar in distributed systems, essential for delivering high availability, consistent performance, and scalable architecture. Acting much like a digital “traffic cop,” a load balancer efficiently distributes incoming network traffic or computational workloads across multiple servers or resources. This not only prevents individual servers from being overwhelmed but also ensures responsiveness and optimal resource utilization across the system.

Key Concepts of Load Balancing

What is a Load Balancer?

A load balancer is a networking device or software that distributes incoming traffic among multiple backend servers. Positioned between the client and server pool, it routes each request to the most suitable server based on predefined algorithms and real-time system conditions. Its goal is to maintain high availability and performance while preventing any single server from becoming a bottleneck.

Why is Load Balancing Important?

- Avoids Overloading: Prevents performance bottlenecks and server crashes.

- Improves Performance: Enhances response time and throughput by distributing the workload evenly.

- Ensures Fault Tolerance: Redirects traffic to healthy servers during failures to maintain uptime.

- Scales Easily: Allows seamless addition/removal of servers without downtime.

- Efficient Resource Utilization: Balances workloads to make optimal use of available server capacity.

How Load Balancers Work

When a client sends a request, it first reaches the load balancer. The load balancer then selects a backend server using an algorithm such as round robin or least connections and forwards the request to it. Because the balancer either proxies the connection or rewrites addresses with techniques such as Network Address Translation (NAT), the client only ever sees the load balancer’s address and remains unaware of the server pool behind it, keeping the interaction seamless.
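To make this request flow concrete, here is a minimal sketch of an HTTP load balancer in Go built on the standard library's `httputil.ReverseProxy`. The names `LoadBalancer`, `NewLoadBalancer`, and `pick` are illustrative, and the sketch assumes a fixed backend list with no health checks; `main` starts two throwaway backends with `httptest` so the example runs on its own.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
	"sync/atomic"
)

// LoadBalancer forwards each request to one backend from a fixed pool.
type LoadBalancer struct {
	proxies []*httputil.ReverseProxy
	next    atomic.Uint64 // counter used to choose the next backend
}

// NewLoadBalancer creates one reverse proxy per backend URL.
func NewLoadBalancer(backendURLs []string) (*LoadBalancer, error) {
	lb := &LoadBalancer{}
	for _, raw := range backendURLs {
		u, err := url.Parse(raw)
		if err != nil {
			return nil, err
		}
		lb.proxies = append(lb.proxies, httputil.NewSingleHostReverseProxy(u))
	}
	return lb, nil
}

// pick returns the index of the backend for the next request (round robin).
func (lb *LoadBalancer) pick() int {
	n := lb.next.Add(1) - 1
	return int(n % uint64(len(lb.proxies)))
}

// ServeHTTP relays the request to the chosen backend; the client only ever
// talks to the balancer's address.
func (lb *LoadBalancer) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	lb.proxies[lb.pick()].ServeHTTP(w, r)
}

func main() {
	// Two throwaway backends that reply with their own name.
	var urls []string
	for _, name := range []string{"backend-A", "backend-B"} {
		name := name
		srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
			io.WriteString(w, name)
		}))
		defer srv.Close()
		urls = append(urls, srv.URL)
	}

	lb, err := NewLoadBalancer(urls)
	if err != nil {
		log.Fatal(err)
	}
	front := httptest.NewServer(lb) // the single address clients see
	defer front.Close()

	for i := 0; i < 4; i++ {
		resp, err := http.Get(front.URL)
		if err != nil {
			log.Fatal(err)
		}
		body, _ := io.ReadAll(resp.Body)
		resp.Body.Close()
		fmt.Println(string(body))
	}
}
```

Because the balancer is an ordinary `http.Handler`, it could just as well be served with `http.ListenAndServe` on a real port instead of a test server.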

Types of Load Balancers

Layer 4 (Transport Layer) Load Balancers

Operating at the transport layer, these load balancers work directly with IP addresses and ports using TCP or UDP. They offer high performance and low resource consumption since they don’t inspect the contents of the packets. Ideal use cases include VPNs, multiplayer game servers, or any application that doesn’t require deep traffic analysis.
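A Layer 4 balancer can be sketched as a TCP relay: it accepts a connection, picks a backend, and copies bytes in both directions without ever parsing them. This is a simplified illustration with hypothetical names (`TCPBalancer`, `startBackend`); it tears down both sides as soon as either direction finishes and has no idle timeouts, both of which a production proxy would handle more carefully.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net"
	"sync/atomic"
)

// TCPBalancer relays raw TCP streams to backends chosen round robin.
// It never parses the bytes it forwards, which is what keeps L4 balancing cheap.
type TCPBalancer struct {
	backends []string // backend "host:port" addresses
	next     atomic.Uint64
}

func (b *TCPBalancer) pick() string {
	n := b.next.Add(1) - 1
	return b.backends[n%uint64(len(b.backends))]
}

// Serve accepts client connections and relays each one to a backend.
func (b *TCPBalancer) Serve(ln net.Listener) error {
	for {
		client, err := ln.Accept()
		if err != nil {
			return err
		}
		go b.relay(client)
	}
}

func (b *TCPBalancer) relay(client net.Conn) {
	defer client.Close()
	backend, err := net.Dial("tcp", b.pick())
	if err != nil {
		log.Printf("dial backend: %v", err)
		return
	}
	defer backend.Close()
	// Copy bytes both ways. Simplification: close both sides as soon as
	// either direction ends (no half-close handling, no idle timeouts).
	done := make(chan struct{}, 2)
	go func() { io.Copy(backend, client); done <- struct{}{} }()
	go func() { io.Copy(client, backend); done <- struct{}{} }()
	<-done
}

// startBackend runs a toy TCP server that greets each connection with its name.
func startBackend(name string) string {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		log.Fatal(err)
	}
	go func() {
		for {
			c, err := ln.Accept()
			if err != nil {
				return
			}
			io.WriteString(c, name)
			c.Close()
		}
	}()
	return ln.Addr().String()
}

func main() {
	lb := &TCPBalancer{backends: []string{startBackend("backend-A"), startBackend("backend-B")}}
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		log.Fatal(err)
	}
	go lb.Serve(ln)

	for i := 0; i < 4; i++ {
		conn, err := net.Dial("tcp", ln.Addr().String())
		if err != nil {
			log.Fatal(err)
		}
		reply, _ := io.ReadAll(conn)
		conn.Close()
		fmt.Println(string(reply))
	}
}
```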

Layer 7 (Application Layer) Load Balancers

Layer 7 balancers take it a step further by inspecting HTTP headers, cookies, URLs, and message bodies. This content awareness allows for advanced routing decisions, such as directing traffic based on geolocation or enabling A/B testing. They can also handle SSL/TLS termination, offloading decryption tasks from backend servers to reduce their load.
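Content-aware routing of this kind might look like the following Go `http.Handler`. The sketch assumes path-prefix rules plus a hypothetical `ab_group` cookie for A/B testing; the pools are stand-in handlers (`named`), where a real balancer would plug in a reverse proxy for each backend pool.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// Route maps a URL path prefix to the handler (e.g. a backend pool) that serves it.
type Route struct {
	Prefix  string
	Handler http.Handler
}

// L7Router inspects each HTTP request and chooses a pool based on its content.
type L7Router struct {
	Routes   []Route      // checked in order; the first matching prefix wins
	Canary   http.Handler // hypothetical pool serving the "B" variant
	Fallback http.Handler // used when nothing else matches
}

func (rt *L7Router) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	// A/B testing: a cookie (name assumed) opts the client into the variant.
	if c, err := r.Cookie("ab_group"); err == nil && c.Value == "b" && rt.Canary != nil {
		rt.Canary.ServeHTTP(w, r)
		return
	}
	// Path-based routing, e.g. /api/ to API servers, /static/ to asset servers.
	for _, route := range rt.Routes {
		if strings.HasPrefix(r.URL.Path, route.Prefix) {
			route.Handler.ServeHTTP(w, r)
			return
		}
	}
	rt.Fallback.ServeHTTP(w, r)
}

// named returns a stand-in pool that just reports which pool answered.
func named(name string) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, name)
	})
}

// route sends one in-memory request through the router and returns the body.
func route(h http.Handler, path, abGroup string) string {
	req := httptest.NewRequest(http.MethodGet, path, nil)
	if abGroup != "" {
		req.AddCookie(&http.Cookie{Name: "ab_group", Value: abGroup})
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Body.String()
}

func main() {
	rt := &L7Router{
		Routes: []Route{
			{Prefix: "/api/", Handler: named("api-pool")},
			{Prefix: "/static/", Handler: named("static-pool")},
		},
		Canary:   named("variant-b-pool"),
		Fallback: named("web-pool"),
	}
	fmt.Println(route(rt, "/api/users", ""))
	fmt.Println(route(rt, "/static/app.css", ""))
	fmt.Println(route(rt, "/checkout", "b"))
	fmt.Println(route(rt, "/", ""))
}
```

None of these decisions is possible at Layer 4, since the path and cookies live inside the HTTP payload that a transport-level balancer never reads.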

Other Load Balancer Types

Load balancers can also be classified by how they are deployed. Hardware load balancers are known for performance but limited by cost and flexibility. Software-based options are more scalable and cost-effective. Cloud-based load balancers offer easy setup on provider-managed infrastructure, while Global Server Load Balancers (GSLB) distribute traffic across regions, improving latency and disaster recovery.

Load Balancing Algorithms

Static Algorithms

- Round Robin: Cycles requests through servers in order.

- Weighted Round Robin: Distributes traffic based on server capability weights.

- IP Hash: Routes based on hashed client IP for session consistency.
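Each static algorithm fits in a few lines of Go. For weighted round robin, this sketch uses the "smooth" variant found in nginx, which interleaves a heavy server's picks instead of sending its whole share back to back; for IP hash it uses FNV-1a from the standard library. These are single-goroutine illustrations with hypothetical type names, not thread-safe implementations.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// RoundRobin cycles through servers in order.
// Not safe for concurrent use; a real balancer would guard next with a mutex or atomic.
type RoundRobin struct {
	servers []string
	next    int
}

func (rr *RoundRobin) Next() string {
	s := rr.servers[rr.next%len(rr.servers)]
	rr.next++
	return s
}

type weightedServer struct {
	name    string
	weight  int
	current int
}

// WeightedRoundRobin gives each server weight/total of the picks, spread
// evenly over each cycle (smooth weighted round robin).
type WeightedRoundRobin struct {
	servers []*weightedServer
	total   int
}

func NewWeightedRoundRobin(names []string, weights []int) *WeightedRoundRobin {
	w := &WeightedRoundRobin{}
	for i, name := range names {
		w.servers = append(w.servers, &weightedServer{name: name, weight: weights[i]})
		w.total += weights[i]
	}
	return w
}

func (w *WeightedRoundRobin) Next() string {
	var best *weightedServer
	for _, s := range w.servers {
		s.current += s.weight // every server earns credit equal to its weight
		if best == nil || s.current > best.current {
			best = s
		}
	}
	best.current -= w.total // the winner pays back the total weight
	return best.name
}

// IPHash maps a client IP to a server, so the same client keeps reaching the
// same server as long as the server list does not change.
func IPHash(clientIP string, servers []string) string {
	h := fnv.New32a()
	h.Write([]byte(clientIP))
	return servers[h.Sum32()%uint32(len(servers))]
}

func main() {
	rr := &RoundRobin{servers: []string{"s1", "s2", "s3"}}
	wrr := NewWeightedRoundRobin([]string{"big", "small"}, []int{3, 1})
	for i := 0; i < 4; i++ {
		fmt.Println(rr.Next(), wrr.Next())
	}
	fmt.Println(IPHash("203.0.113.7", []string{"s1", "s2", "s3"}))
}
```

With weights 5, 1, and 1 for servers a, b, and c, the smooth variant produces the cycle `a a b a c a a`. One caveat of plain modulo hashing: adding or removing a server remaps most clients, which is why consistent hashing is often preferred when session consistency matters.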

Dynamic Algorithms

- Least Connections: Picks the server with the fewest active connections.

- Least Response Time: Chooses the fastest-responding server.

- Resource-Based: Routes based on current server resource availability (e.g., CPU, memory).
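Dynamic algorithms depend on live measurements. This sketch tracks in-flight requests and an exponentially weighted moving average (EWMA) of response time per backend; the `Pool`/`Backend` names and the 0.2 smoothing factor are assumptions for illustration. Callers must invoke `Done` when a request finishes so the counters stay accurate.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// Backend holds the live measurements the dynamic algorithms rely on.
type Backend struct {
	Name       string
	active     int           // requests currently in flight
	avgLatency time.Duration // EWMA of observed response times (0 = no data yet)
}

// Pool is safe for concurrent use; every read and update happens under mu.
type Pool struct {
	mu       sync.Mutex
	backends []*Backend
}

// LeastConnections picks the backend with the fewest in-flight requests
// (ties go to the earlier backend) and counts the new request against it.
func (p *Pool) LeastConnections() *Backend {
	p.mu.Lock()
	defer p.mu.Unlock()
	best := p.backends[0]
	for _, be := range p.backends[1:] {
		if be.active < best.active {
			best = be
		}
	}
	best.active++
	return best
}

// LeastResponseTime picks the backend with the lowest average latency,
// breaking ties by fewer in-flight requests. Untried backends (average 0)
// are therefore tried first.
func (p *Pool) LeastResponseTime() *Backend {
	p.mu.Lock()
	defer p.mu.Unlock()
	best := p.backends[0]
	for _, be := range p.backends[1:] {
		if be.avgLatency < best.avgLatency ||
			(be.avgLatency == best.avgLatency && be.active < best.active) {
			best = be
		}
	}
	best.active++
	return best
}

// Done records that a request to be finished after the given latency.
func (p *Pool) Done(be *Backend, latency time.Duration) {
	p.mu.Lock()
	defer p.mu.Unlock()
	be.active--
	if be.avgLatency == 0 {
		be.avgLatency = latency
	} else {
		// EWMA with smoothing factor 0.2: new = 0.8*old + 0.2*sample.
		be.avgLatency = (4*be.avgLatency + latency) / 5
	}
}

func main() {
	p := &Pool{backends: []*Backend{{Name: "a"}, {Name: "b"}}}
	x := p.LeastConnections() // a: tie at zero, first backend wins
	y := p.LeastConnections() // b: a already has one request in flight
	p.Done(x, 120*time.Millisecond)
	p.Done(y, 40*time.Millisecond)
	z := p.LeastResponseTime() // b: lower average latency
	fmt.Println(x.Name, y.Name, z.Name) // prints "a b b"
	p.Done(z, 50*time.Millisecond)
}
```

A resource-based policy would follow the same pattern, replacing the connection count or latency with CPU or memory figures reported by each server.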

Challenges in Load Balancing

Despite its benefits, load balancing introduces complexity. The load balancer itself can become a single point of failure, which is why redundant balancers are essential. Configurations can become difficult to manage without regular reviews and automation. The balancer can also become a bottleneck as traffic grows, and sticky sessions may lead to uneven load distribution. Latency, costs, traffic variability, and compliance requirements also need attention. Effective solutions rely on strong monitoring, real-time adaptability, and streamlined operational tools.

Scaling Strategies for Load Balancers

Scaling can be tackled horizontally by adding servers or vertically by upgrading existing ones. Auto-scaling dynamically adjusts capacity based on demand, which cuts costs and improves uptime. Blue-green deployments allow traffic to shift between environments without downtime. Meanwhile, dynamic algorithms continuously refine how traffic is routed, ensuring optimal performance.
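Auto-scaling policies often use a proportional target-tracking rule: scale the server count by the ratio of observed to target utilization. The function below follows the form Kubernetes documents for its HorizontalPodAutoscaler, desired = ceil(current * observed / target), including a tolerance band (0.1 by default there) that skips small adjustments to avoid flapping. The function name, parameters, and bounds are illustrative assumptions.

```go
package main

import (
	"fmt"
	"math"
)

// DesiredServers returns how many servers a target-tracking policy would run.
// observed and target share a unit (e.g. average CPU %). Ratios within
// tolerance of 1.0 leave the count unchanged, and the result is clamped to
// [minServers, maxServers].
func DesiredServers(current int, observed, target, tolerance float64, minServers, maxServers int) int {
	desired := current
	ratio := observed / target
	if math.Abs(ratio-1) > tolerance {
		desired = int(math.Ceil(float64(current) * ratio))
	}
	if desired < minServers {
		desired = minServers
	}
	if desired > maxServers {
		desired = maxServers
	}
	return desired
}

func main() {
	// 4 servers at 90% CPU against a 60% target: scale out.
	fmt.Println(DesiredServers(4, 90, 60, 0.1, 2, 10)) // prints 6
	// 4 servers at 20% CPU: scale in, but never below the minimum of 2.
	fmt.Println(DesiredServers(4, 20, 60, 0.1, 2, 10)) // prints 2
}
```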

Session Persistence (Sticky Sessions)

Sticky sessions direct a user’s repeated requests to the same server, which benefits applications that keep session state on that server. Load balancer-generated cookies provide automatic tracking, while applications may instead use their own custom cookies. Some platforms also offer target group stickiness, which keeps a user on the same group of servers during staged rollouts.

This setup improves user experience and caching efficiency, but it can also introduce scalability challenges and risks of uneven load. Alternatives like stateless design, client-side storage with JWTs, distributed caching, or database-backed sessions offer more flexibility.
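Cookie-based stickiness generated by the load balancer can be sketched as follows: the first response pins the client by setting a cookie that identifies its backend, and later requests carrying a valid cookie bypass the selection algorithm. The cookie name `lb_backend`, storing a raw backend index in it, and the helper names are assumptions for this example; production balancers typically encode or sign the value.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strconv"
)

// stickyCookie is a hypothetical name for the balancer-generated cookie.
const stickyCookie = "lb_backend"

// StickyBalancer pins each client to one backend using a cookie.
// Not safe for concurrent use; next would need a mutex or atomic in practice.
type StickyBalancer struct {
	backends []http.Handler
	next     int // round-robin cursor for clients without a valid cookie
}

func NewStickyBalancer(backends ...http.Handler) *StickyBalancer {
	return &StickyBalancer{backends: backends}
}

func (sb *StickyBalancer) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	// Returning client: honor the cookie if it names a known backend.
	if c, err := r.Cookie(stickyCookie); err == nil {
		if i, err := strconv.Atoi(c.Value); err == nil && i >= 0 && i < len(sb.backends) {
			sb.backends[i].ServeHTTP(w, r)
			return
		}
	}
	// New client (or stale cookie): pick round robin and pin later requests.
	i := sb.next % len(sb.backends)
	sb.next++
	http.SetCookie(w, &http.Cookie{Name: stickyCookie, Value: strconv.Itoa(i), Path: "/", HttpOnly: true})
	sb.backends[i].ServeHTTP(w, r)
}

// named returns a stand-in backend that reports its name.
func named(name string) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, name)
	})
}

// visit sends one request (with an optional cookie) and returns the body plus
// the sticky cookie the client should keep.
func visit(h http.Handler, cookie *http.Cookie) (string, *http.Cookie) {
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	if cookie != nil {
		req.AddCookie(cookie)
	}
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	for _, c := range rec.Result().Cookies() {
		if c.Name == stickyCookie {
			return rec.Body.String(), c
		}
	}
	return rec.Body.String(), cookie
}

func main() {
	sb := NewStickyBalancer(named("A"), named("B"))
	_, alice := visit(sb, nil) // the first visit assigns a backend
	_, bob := visit(sb, nil)
	for i := 0; i < 3; i++ {
		a, _ := visit(sb, alice)
		b, _ := visit(sb, bob)
		fmt.Println("alice ->", a, "| bob ->", b)
	}
}
```

The trade-off described above shows up directly here: once pinned, a client never moves, so a busy client keeps loading the same server even if others sit idle.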

Health Checks and Monitoring

Health checks are vital to a reliable load balancing system: they identify unhealthy servers so that traffic can be rerouted to healthy ones. Active checks periodically send TCP, HTTP, or ICMP probes to each server. Passive checks monitor live traffic for signs of failure, such as errors or timeouts.

You can fine-tune these checks with configurable settings like ports, paths, timeouts, and thresholds. Best practices include using redundant checks, avoiding false positives by adjusting frequency, and automating recovery. Always validate configurations in a staging environment before deployment.
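Here is a sketch of an active HTTP health checker with consecutive-result thresholds: a server leaves rotation only after several failed checks in a row and returns only after several passes, which damps false positives from a single slow response. The `/healthz` path, the 2-second timeout, and the threshold values are assumptions; in a real balancer the `Healthy` flag would need a mutex or atomic, since the request path reads it while the checker updates it.

```go
package main

import (
	"context"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// Target tracks one backend's health using consecutive-result thresholds.
type Target struct {
	URL       string
	Healthy   bool
	successes int // consecutive passing checks
	failures  int // consecutive failing checks
}

// Thresholds mirror settings most load balancers expose; values are illustrative.
type Thresholds struct {
	Healthy   int // passes needed to bring an unhealthy target back
	Unhealthy int // failures needed to take a healthy target out of rotation
}

// Record applies one check result and reports whether the state changed.
func (tg *Target) Record(ok bool, th Thresholds) bool {
	if ok {
		tg.successes++
		tg.failures = 0
		if !tg.Healthy && tg.successes >= th.Healthy {
			tg.Healthy = true
			return true
		}
	} else {
		tg.failures++
		tg.successes = 0
		if tg.Healthy && tg.failures >= th.Unhealthy {
			tg.Healthy = false
			return true
		}
	}
	return false
}

// ProbeHTTP is an active check: GET the URL and expect a 2xx within the client timeout.
func ProbeHTTP(client *http.Client, url string) bool {
	resp, err := client.Get(url)
	if err != nil {
		return false
	}
	defer resp.Body.Close()
	return resp.StatusCode >= 200 && resp.StatusCode < 300
}

// Run probes every target once per interval until ctx is cancelled.
func Run(ctx context.Context, targets []*Target, path string, interval time.Duration, th Thresholds) {
	client := &http.Client{Timeout: 2 * time.Second} // per-check timeout (assumed)
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		for _, tg := range targets {
			if tg.Record(ProbeHTTP(client, tg.URL+path), th) {
				fmt.Printf("%s healthy=%v\n", tg.URL, tg.Healthy)
			}
		}
		select {
		case <-ctx.Done():
			return
		case <-ticker.C:
		}
	}
}

func main() {
	up := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {}))
	defer up.Close()
	down := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusServiceUnavailable)
	}))
	defer down.Close()

	// Both start healthy; the failing one is removed after 3 consecutive failures.
	targets := []*Target{{URL: up.URL, Healthy: true}, {URL: down.URL, Healthy: true}}
	ctx, cancel := context.WithTimeout(context.Background(), 500*time.Millisecond)
	defer cancel()
	Run(ctx, targets, "/healthz", 100*time.Millisecond, Thresholds{Healthy: 2, Unhealthy: 3})
}
```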

Conclusion

Load balancing underpins the reliability and scalability of modern distributed systems. From basic IP-level routing to content-aware HTTP inspection, the choice of strategy and algorithm significantly impacts performance. Combined with robust health checks and scalable infrastructure, a well-designed load balancing system ensures seamless service delivery, even under high demand.